Thursday, September 28, 2006

With a one-day delay, I'm now able to make the video of Aaron's talk available for everyone to download. Here is the link: aKademy2006-Plasma.avi. It's a 136 MB download, 504x288 resolution, XviD encoded. Enjoy!

Monday, September 25, 2006

Goodbye Dublin!

So now my time in Dublin is over. Unfortunately I had no time to finish yet another transcript, so you will have to wait until I'm back home. Perhaps I can prepare the next transcript at the airport so I can publish it sooner, but who knows...

Having found my bus connection to the airport, I have to say goodbye and walk to the central bus station. I hope that this time checking in will not be as problematic as in Frankfurt... :-)

Time to eat something

We will have a photo shoot at 2pm, and I want to eat something beforehand. So I will take a short break now and get something to eat. I still have some transcripts on my disk: KDevelop, KickOff and the C# bindings talk. Stay tuned!

aKademy 2006: Performance techniques

This talk was held by our optimizer, Lubos Lunak.

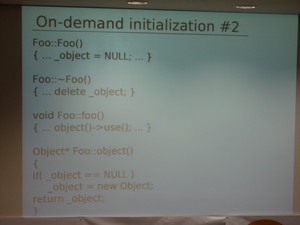

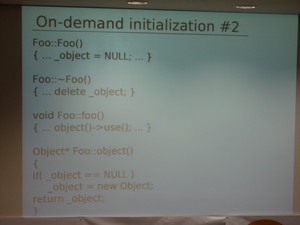

KDE Performance

- Is not that good

- Windows 95 is so much faster

- and don't get me started on JetPac

As you can see, we have to compare ourselves with something in our own league:

Is not that bad either

- we are not noticeably worse than comparable competition

- in fact, we are often even better

- there's incomparable competition

So, no need to be very nervous, but we can still improve

KDE's performance is bad because:

- Some libraries we use are bad

- dynamic linker (shared libs) is bad (prelinking does not help much)

- I/O performance is bad

- really stupid mistakes are bad (e.g. he noticed that kicker would easily use 16 MB of memory, then found out that the pager, which can show the desktop background, cached all background images at their original size rather than the scaled-down size)

- many small things add up

- nice things are sometimes not fast (kconfig is nice, but it is rather slow at parsing all the text files)

- unneeded things are done (some time ago Kate created the find and replace dialog on startup)

- initial resource usage is large because our framework is large (libraries, but that pays off when you start more applications)

What to do

- find the problem

- analyze the problem

- do NOT guess

- measure

- verify assumptions

- speed: cachegrind, sysprof

- memory: exmap, xrestop, kmtrace

- fix the problem

On-demand initialization
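
As an illustration of on-demand initialization (the talk mentioned Kate creating its find and replace dialog at startup), here is a minimal Python sketch. The class names `FindDialog` and `Editor` are made up for this example and are not KDE classes; the only point is that the expensive object is built on first use rather than at startup.

```python
from functools import cached_property


class FindDialog:
    """Stand-in for an expensive-to-build dialog (hypothetical)."""

    instances_created = 0

    def __init__(self):
        FindDialog.instances_created += 1

    def show(self):
        return "find dialog shown"


class Editor:
    """Creates its find dialog lazily instead of during startup."""

    @cached_property
    def find_dialog(self):
        # Runs only on first access; the result is cached on the instance.
        return FindDialog()

    def find(self):
        return self.find_dialog.show()


if __name__ == "__main__":
    editor = Editor()                      # startup: no dialog built yet
    print(FindDialog.instances_created)    # 0
    editor.find()                          # first use builds the dialog
    editor.find()                          # later uses reuse it
    print(FindDialog.instances_created)    # 1
```

Users who never open the dialog never pay for it, which is the whole idea behind the heading above.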

Caching

Don't do the same thing over and over again. Save the result somewhere and check that the input hasn't changed (e.g. ksycoca).

We do many things during startup of every KDE application:

kconfig and qsettings are rather inefficient, and some things are repeated by every application.

Bottom line: We need to make more use of caching!
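
The "save the result, check that the input hasn't changed" idea can be sketched in a few lines of Python. This is not how ksycoca works internally; `expensive_parse` and `load_cached` are hypothetical names, and using modification time plus file size as the change check is an assumption made for this example.

```python
import json
import os
import tempfile


def expensive_parse(path):
    """Stand-in for slow work, e.g. parsing a text config file (hypothetical)."""
    with open(path, encoding="utf-8") as f:
        return {"lines": len(f.read().splitlines())}


def load_cached(source_path, cache_path):
    """Return (result, from_cache); reuse the cache if the input is unchanged."""
    stat = os.stat(source_path)
    stamp = [stat.st_mtime_ns, stat.st_size]
    try:
        with open(cache_path, encoding="utf-8") as f:
            cached = json.load(f)
        if cached["stamp"] == stamp:
            return cached["result"], True
    except (OSError, ValueError, KeyError, TypeError):
        pass  # missing or corrupt cache: fall through and rebuild
    result = expensive_parse(source_path)
    with open(cache_path, "w", encoding="utf-8") as f:
        json.dump({"stamp": stamp, "result": result}, f)
    return result, False


if __name__ == "__main__":
    folder = tempfile.mkdtemp()
    source = os.path.join(folder, "app.conf")
    cache = os.path.join(folder, "app.cache")
    with open(source, "w", encoding="utf-8") as f:
        f.write("a=1\nb=2\n")
    print(load_cached(source, cache))  # first call parses the file
    print(load_cached(source, cache))  # second call is served from the cache
```

The first call pays for the parse and every later call is a cheap stat plus one read, until the source file changes.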

I/O performance #1

Time for this year's quiz:

Which is faster?

=> Disk seeks are very slow (~10ms for every seek)

I/O performance #2

- Try to avoid many small files

- create single cache file at build time

- create a single cache file at runtime

- don't forget to watch for changes

- on-demand loading (konsole loads all color schemes on startup, although that would not be necessary)

- kernel could(?) help
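
To make the "single cache file instead of many small files" point concrete, here is a rough Python sketch that packs several small files into one file with an index up front. The file layout (4-byte length, JSON index, raw data) and the names `build_pack` and `read_from_pack` are invented for this example; it is not the format of any KDE cache. Watching for changes, which the list above warns about, is left out.

```python
import json
import os
import struct
import tempfile


def build_pack(file_paths, pack_path):
    """Concatenate many small files into one pack file with an index."""
    index = {}
    blobs = []
    offset = 0
    for path in file_paths:
        with open(path, "rb") as f:
            data = f.read()
        index[os.path.basename(path)] = [offset, len(data)]
        blobs.append(data)
        offset += len(data)
    header = json.dumps(index).encode("utf-8")
    with open(pack_path, "wb") as out:
        out.write(struct.pack("<I", len(header)))  # 4-byte header length
        out.write(header)
        out.writelines(blobs)


def read_from_pack(pack_path, name):
    """Read one entry from the pack file by name."""
    with open(pack_path, "rb") as f:
        (header_len,) = struct.unpack("<I", f.read(4))
        index = json.loads(f.read(header_len).decode("utf-8"))
        offset, length = index[name]
        f.seek(4 + header_len + offset)
        return f.read(length)
```

Reading an entry opens one file and seeks within it, instead of opening a separate small file for every icon or color scheme.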

Other tips: Cheat! - Seriously :) Show progress (use a splashscreen, show messages or whatever), provide early feedback, optimize the common case, optimize big bottlenecks.

Many small things add up, we are large and complex.

"A hundred times nothing tormented the donkey to death" (Slovak proverb)

Time goes on; this KDE will never run on 16 MB RAM.

We need to compare with comparable competition (We are never a match for xedit or WindowMaker)

We have to live with that. There is a limit that cannot reasonably be reached without significantly reducing features. We could cut the things that slow us down, but would have to sacrifice many features.

Would that be still KDE?

Some questions which were raised afterwards:

Question: When we switch to SVG-icons, how can we make sure that the performance stays ok?

Answer: We need to cache the generated pngs and probably put them into one big file, to improve the "many small files" situation. (Gnome already does that).

Someone suggested using the Qt Resource system to load arbitrary data (like icons etc.) into one library and then have the KDE4 application link to it, so that the data is available faster because it should already be in memory. Lubos Lunak did not like that idea very much because of the overhead of a library. He prefers to create specialized cache files, which should be even faster.

Personal comment: This was very interesting, and I hope that KDE4 can do something in the "many small files" area, such as creating cache files containing all icons of the current icon set, or caching the user's configuration in one big file, etc.

aKademy 2006: State of KHTML

This talk was held by George Staikos.

The history of KHTML

The Safari fork was very popular (as you see on the forks)

Merging back into KHTMLr2 was not very good (big patches dumped from them)

Unity was the experiment to bring WebKit back to KDE

Why KHTML is important

KHTML is _critical_ to the success of KDE!

- Provides a fast, light web browser and

- component that tightly integrates with KDE

- Gives us higher visibility as a project: "the web" is much larger than Linux

- great test environment

What we have: (He shows a slide with all the standards KHTML implements and says:)

The web in general

What we don't have

- internal SVG support

- Latest NSPlugin API support

- XBL

- Content Editable

- DOM bindings to non-C++/non-JS

- Opacity (Qt4 should help)

- Lightweight widgets (lots of widgets on a page can really slow down KHTML)

KHTML - From the industry

Great alternative to Gecko and Opera - small, fast, easy to understand, standards compliant. In use in many embedded platforms as well as desktop browsers: Safari, Nokia, Omni. But: Forking is a problem.

Currently gaining respect from other browser vendors, could become a major player with enough "unity" >= 10% market share.

KHTML is also gaining industry respect (Microsoft regularly contacts KHTML developers, as do Firefox developers, Google etc.)

Can we complete the "merge"?

- "Merging" is not really feasible at this point

- Unity - a port of WebKit to Qt4:

- Kpart, KIO development is underway

- Can co-exist with KHTML from KDE

- Works "now"

- Abstraction layer in WebKit makes it relatively easy to port

- Open questions: How do we avoid re-forking? How do we merge our own work?

If we go Unity, what do we gain?

- Support for many of the functions we lack as described earlier - XBL, content editable, etc.

- Bug-for-bug compatibility with many major browsers (this is important for industry acceptance, because they do not want to test their applications on gazillions of different browsers)

- More developer resources

- larger user base for testing and bug reporting (they have some great people which just create test cases for all forms of bugs)

- easier embedding in Qt-only apps

- Portability - the best win32 port?

What do we lose?

- Some possible trade offs in bug fixes and performance (work recently done by Maksim, Germain, Allan)

- Some flexibility in development model (we need to work as a team with Nokia, Apple, etc.)

- Complete authority over the codebase

- Some functionality needs rewrite (form widgets, java applets, nsplugins, wallet, KDE integration)

He also wants us to work more closely with the working groups:

Working with working groups

- W3C-Security (George has taken part in it)

- The W3C is constantly approaching him and wants us to be more involved

- WhatWG - HTML5 (this is really great and we should be actively taking part in it!)

- KHTML/WebKit meetings (he takes part every 3-4 months)

- Plugin Features (new plugin API, very important, George has no time for it)

- SVG

- Browser testing organization (Mozilla is forming this right now, we could participate, we would benefit from it greatly)

- JavaScript as standard programming language (is more and more used in MacOSX, we have KJSEmbed and are also embracing it, Plasma will use it as standard language for plasmoids for example)

Discussion

- Do we want to pursue WebKit/Unity? (if so, how do we approach it? what will the impact be on our community?)

- How do we avoid losing our own work

- How do we ensure that we are equal players in a joint effort with Apple, Nokia and others?

- How can we grow developer-interest without cannibalizing our existing developer base?

Question: What about the performance patches that were done (which are not in WebKit/Unity)?

Answer: CSS optimizations by Allan, new caching done by Maksim

Personal comment: I think Unity is a great idea and I think we would all benefit from going this route in the long run, although we would lose some features of the current KHTML.

aKademy 2006: Decibel - You are not alone

This talk was held by Tobias Hunger from basysKom.

First he briefly explained that Decibel will be a real-time communications framework.

What is real-time communication?

- Instant messaging

- VoIP/video conferencing

- Computer Telephony Integration (CTI) (using software to autodial the telephone, for example)

Why a framework?

- communication is one of the fundamental use cases of computers

- integrated communication is a prerequisite of collaboration

Which technologies exist?

Telepathy is an RT communications infrastructure: it defines DBus interfaces to access RT communication services (desktop independent, low level API, hosted at freedesktop.org)

An implementation of the Jabber protocol is available and used in existing products. Gnome is heading in this direction.

Connection Manager (implements protocols)

Connections are created by the connection manager (represents one connection to a server/service)

In such a connection you have one or more channels of data which may be transported over the connection (for example presence, and chat in IM)
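
The relationship between connection manager, connections and channels described above can be sketched as a small Python object model. This is only an illustration of the structure; the class and method names are invented here and do not correspond to the actual Telepathy DBus interfaces.

```python
from dataclasses import dataclass, field


@dataclass
class Channel:
    """One stream of data inside a connection (hypothetical model)."""
    kind: str      # e.g. "presence" or "text"
    target: str    # who or what the channel is about


@dataclass
class Connection:
    """One connection to a server/service, carrying one or more channels."""
    protocol: str
    account: str
    channels: list = field(default_factory=list)

    def open_channel(self, kind, target):
        channel = Channel(kind, target)
        self.channels.append(channel)
        return channel


class ConnectionManager:
    """Implements one or more protocols and creates connections for them."""

    def __init__(self, protocols):
        self.protocols = set(protocols)
        self.connections = []

    def request_connection(self, protocol, account):
        if protocol not in self.protocols:
            raise ValueError(f"unsupported protocol: {protocol}")
        connection = Connection(protocol, account)
        self.connections.append(connection)
        return connection


if __name__ == "__main__":
    manager = ConnectionManager(["jabber"])
    conn = manager.request_connection("jabber", "alice@example.org")
    conn.open_channel("presence", "roster")
    conn.open_channel("text", "bob@example.org")
    print([c.kind for c in conn.channels])  # ['presence', 'text']
```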

Tapioca used to be an infrastructure competing with Telepathy. Today they implement the Telepathy spec.

- Qt binding for Telepathy with raw DBus bindings

- convenience wrapper QtTapioca

The question that remains: What will Decibel add on top of this?

One of Decibel's central parts is Houston:

- Provides desktop independent DBus interfaces to high level RT communication features

- Persistently stores user data (accounts, preferences etc)

- starts/stops connection managers as required

- starts/stops desktop dependent components as required (image)

Houston consists of 3 parts:

- AccountManager (stores the user's account data in a central place: connectivity, visibility information, privacy information)

- ProtocolManager (holds a list of installed connection managers with their supported protocols, selects the connection manager to use for a protocol, stores preferred connection managers)

- ComponentManager (is a registry of components, stores the user's preferences on which component is supposed to handle which kind of channel, is notified of new channel events, decides which component should handle a new channel)
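
The ComponentManager's job, as described in the list above, boils down to a registry plus a dispatch decision. The following Python sketch shows one way that could look; all names and the fallback rule (first registered component that supports the channel kind) are assumptions for illustration, not Houston's actual design.

```python
class ComponentManager:
    """Registry mapping channel kinds to handler components (hypothetical)."""

    def __init__(self):
        self._components = {}    # component name -> (supported kinds, handler)
        self._preferences = {}   # channel kind -> preferred component name

    def register(self, name, kinds, handler):
        self._components[name] = (set(kinds), handler)

    def set_preference(self, kind, name):
        self._preferences[kind] = name

    def on_new_channel(self, kind, payload):
        """Decide which component handles a new channel and hand it over."""
        preferred = self._preferences.get(kind)
        if preferred in self._components:
            kinds, handler = self._components[preferred]
            if kind in kinds:
                return handler(payload)
        # No usable preference: fall back to the first capable component.
        for kinds, handler in self._components.values():
            if kind in kinds:
                return handler(payload)
        raise LookupError(f"no component handles channel kind {kind!r}")


if __name__ == "__main__":
    manager = ComponentManager()
    manager.register("chat-window", ["text"], lambda p: f"chat-window got {p}")
    manager.register("logger", ["text", "presence"], lambda p: f"logger got {p}")
    manager.set_preference("text", "logger")
    print(manager.on_new_channel("text", "hello"))       # logger got hello
    print(manager.on_new_channel("presence", "online"))  # logger got online
```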

Desktop Components are specialized applications started by Houston

- desktop specific

- handle one specific task well

- work in concert with Houston and other components

Status: (Or where is the code?)

He says "We are behind schedule". :-(

But we got some things done already:

- Qt bindings to Telepathy were missing (done)

- QtTapioca was launched to make writing applications like Houston easier (in progress)

- Houston implementation (started)

- KDE specific components (todo)

There will be a BoF session with the Telepathy/Decibel/Kopete people to move this forward.

Personal comment: Well, this sounds really interesting, but I hope that the Kopete developers can agree on this and that they can somehow reuse most of the code they have written for Kopete over time.

Sunday, September 24, 2006

aKademy 2006: Akonadi - The KDE4 PIM Framework

This talk was held by Tobias Koenig.

He started with the following:

The 3 biggest mistakes made in human computer history:

- There will not be more than 10 computers in the world

- Nobody will need more than 640k of RAM

- Nobody will save more than 100 contacts in an address book. (This one was made by me, 3 years ago)

In summary he explained the following points:

Why a new PIM Framework?

Akonadi (History, Concepts, Current State, The Future)

Problems with old framework:

Bad Performance. (All data was loaded into memory. That's fine for local files, but when accessing groupware servers it brings the application down)

Synchronous Access. (Again, no problem for local files because this is fast anyway, but problematic for remote data)

Memory Consumption.

Missing Notifications. (The other applications did not know about changes in resources other than file resources)

Missing locking. (Very problematic when you want to synchronize data, because other processes could change the data while you were synchronizing)

Akonadi:

A general storage for PIM data that aims to solve the problems of kabc/kcal with a modular design for robustness and easy integration.

The first ideas for a PIM daemon came up at the Osnabrück meeting 2005. Sample code for an address book daemon was created, but it was not well received by developers. But one year later: General agreement on a PIM service at the Osnabrück meeting 2006. "Akonadi" was born.

Akonadi is a service composed of two processes:

The storage (interfaced with IMAP) and the Control process with a DBus interface

libakonadi wraps the service and IMAP connection and provides an easy to use interface. On top of that, some small wrapper libraries like libkabc and libkcal exist. The applications will use these libraries directly.

The storage:

- Accessible via extended IMAP => high performance on data delivery (IMAP was invented for that, but until now only used for mail)

- Caches all PIM items of the resources (depending on policy, e.g. groupware data is cached, local files likely not)

- Informs the control-process about changes

- Provides basic search features (IMAP)

- delegates extended search requests to SearchProviders

The resources:

- Applications which synchronize data between the storage and an external data source (e.g. a groupware server or file). (This improves stability because a crashing resource does not take the whole system down)

- Asynchronous communication

- Profiles for grouping resources together (allows different views for normal viewing and synchronization)

The control-process

- Starts and monitors the Storage and resource processes (resource processes are automatically restarted on crashes)

- Provides D-Bus API for managing resources and profiles

- Another library wraps this D-Bus API for use by applications
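
The "automatically restarted on crashes" behaviour of the control process can be illustrated with a very small supervisor loop in Python. This is a simplified sketch and not Akonadi code: it supervises a single child process sequentially, treats any non-zero exit code as a crash, and the names `supervise` and `FLAKY_CHILD` are made up for the example. The child is a tiny script that fails on its first two starts and succeeds on the third.

```python
import os
import subprocess
import sys
import tempfile


def supervise(command, max_restarts=3):
    """Run a command, restarting it after a crash; return the number of starts."""
    starts = 0
    while True:
        starts += 1
        exit_code = subprocess.run(command).returncode
        if exit_code == 0:
            return starts              # clean exit: stop supervising
        if starts > max_restarts:
            raise RuntimeError(f"gave up after {starts} starts")
        # A non-zero exit is treated as a crash, so loop and restart.


# Hypothetical flaky "resource": counts its starts in a file and only
# exits cleanly on the third start.
FLAKY_CHILD = (
    "import sys, pathlib\n"
    "p = pathlib.Path(sys.argv[1])\n"
    "n = int(p.read_text()) + 1 if p.exists() else 1\n"
    "p.write_text(str(n))\n"
    "sys.exit(0 if n >= 3 else 1)\n"
)

if __name__ == "__main__":
    counter = os.path.join(tempfile.mkdtemp(), "starts.txt")
    print(supervise([sys.executable, "-c", FLAKY_CHILD, counter]))  # 3
```

Because each resource lives in its own process, the supervisor only has to restart the one that died, which is the stability argument made in the resources section.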

The SearchProviders

- External applications which process more complex search queries

- One SearchProvider for every PIM item type

- Store search results in collections inside the Storage

Collections

- Virtual containers inside the Storage

- Contain PIM items/references

- Root collection "/"

- Every Resource provides at least one collection

- Easy to realize virtual folders

- /resource1

- /resource1/calendar

- /resource1/addressbook

- /resource2

- /search
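
The collection layout shown above is essentially a tree of named containers with a root "/". A minimal Python sketch of such a tree follows; the `Collection` class and its methods are invented for illustration and say nothing about how the Akonadi storage represents collections internally. Items are kept as plain references (ids), which is what makes virtual folders like /search cheap.

```python
class Collection:
    """A virtual container holding PIM item references (hypothetical model)."""

    def __init__(self, name, parent=None):
        self.name = name
        self.parent = parent
        self.children = {}
        self.items = []          # item ids or references, not the data itself

    @property
    def path(self):
        if self.parent is None:
            return "/"
        prefix = self.parent.path.rstrip("/")
        return f"{prefix}/{self.name}"

    def child(self, name):
        """Return the named sub-collection, creating it if needed."""
        if name not in self.children:
            self.children[name] = Collection(name, self)
        return self.children[name]

    def walk(self):
        """Yield this collection and all descendants, depth first."""
        yield self
        for sub in self.children.values():
            yield from sub.walk()


if __name__ == "__main__":
    root = Collection("")
    resource1 = root.child("resource1")
    resource1.child("calendar")
    resource1.child("addressbook").items.append("contact-42")
    root.child("resource2")
    # A virtual folder only references items that live elsewhere.
    root.child("search").items.append("contact-42")
    for collection in root.walk():
        print(collection.path)
```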

Current State

- Storage (provides all needed IMAP commands), stores items in collections

- Control (lifetime management for storage and resources already working, DBus API nearly completed)

- Resources (Simple iCalendar file, dummy resource "Knut")

[Demo]

Todo

- Implementing SearchProviders

- Defining asynchronous client library (libakonadi nearly done)

- Implementing full featured resources (remote and local file)

- Writing documentation

The future:

Goal: Components based PIM library where you create new applications just by gluing together components. Components meant as standalone views and editors of PIM data. Plasma applets for calendar and address book are also possible.

After that, a somewhat heated debate arose around the general question: Why not reuse existing technologies like LDAP or something else? Concerns were also raised that the SearchProviders are separate processes, which would hurt performance.

Personal opinion: To put it bluntly: I was a little bit disappointed by this talk, and I'm also disappointed with Akonadi. Don't misunderstand me: It's still a good concept, but I think some bits are still missing and they should consider redesigning some parts of it.

aKademy 2006: Multi-Head RandR

This talk was held by our all time X-hero Keith Packard.

He titled it "Hotpluggy Sweetness". Basically, they want monitors and input devices to be fully pluggable.

First he showed the history of X and screens and how this all evolved:

Core X does support multiple screens.

When the X server starts, it detects the monitors (through DDC) and then starts everything up. After that it does not change. Ever.

Screen info is passed at X startup. (static arrays allocated in Xlib)

Adding screens is really hard. Many resources are per-screen (Windows, Pixmaps, etc)

(Long ago screens had different capabilities (color depth etc.), but today we do not have that anymore, so many of the assumptions made earlier don't make sense anymore)

Xinerama:

Merge monitors into one "screen". Allows applications to move from one monitor to the other.

But: Screen configuration fixed at startup. That is suitable for fixed multi-head environments, but not for today's requirements with notebooks etc.

Also: The initial implementation was very inefficient (all applications are replicated on all graphics cards, which wastes a lot of memory).

Changing Modes

XFree86-VidModeExtension.

Change the monitor mode on the fly, also exposes gamma correction

But: screen size still fixed at startup because of static configuration files, set of possible modes fixed at startup

Changing Screen Size

RandR: Resize and Rotate extension.

Allows run-time changes to screen size (fixed set of sizes and monitor modes as present in the configuration files). A mode is expressed as size and refresh only. This was the first extension to break existing applications, because it changes the screen size.

Now on to the real thing: Hotpluggy Sweetness

- Add/remove monitors dynamically

- Extend desktop across new monitor

- Expose full system capabilities to applications

- Blend Xinerama, XFree86-VidModeExtension and RandR

- World Domination :-)

This new design is based on three "things":

Screen - Core X screen (Accept static screen count, there need be only one)

Crtc - CRT Controller (Scan-out portion of X screen, contains one mode [all of today's graphics cards support more than one monitor, but only one mode!])

Outputs (Drive monitors, Connected to CRTC)
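
The three "things" can be pictured as a small data model. The following Python sketch is only a reading aid, not the X protocol or any Xlib API: the class names mirror the terms from the talk, while the `x`/`y` position fields, the output names and the `required_size` helper are assumptions added for this example.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Mode:
    width: int
    height: int
    refresh_hz: float


@dataclass
class Crtc:
    """Scans out one rectangular portion of the screen in exactly one mode."""
    x: int = 0
    y: int = 0
    mode: Optional[Mode] = None     # None means the CRTC is disabled


@dataclass
class Output:
    """A physical connector that drives a monitor, fed by one CRTC."""
    name: str
    crtc: Optional[Crtc] = None     # None means the output is not driven


@dataclass
class Screen:
    """The single core X screen that all CRTCs scan out from."""
    crtcs: list = field(default_factory=list)

    def required_size(self):
        """Smallest screen size covering every active CRTC."""
        active = [c for c in self.crtcs if c.mode is not None]
        width = max((c.x + c.mode.width for c in active), default=0)
        height = max((c.y + c.mode.height for c in active), default=0)
        return width, height


if __name__ == "__main__":
    panel = Crtc(0, 0, Mode(1280, 800, 60.0))
    external = Crtc(1280, 0, Mode(1024, 768, 75.0))
    outputs = [Output("internal-panel", panel), Output("external-vga", external)]
    screen = Screen([panel, external])
    print(screen.required_size())   # (2304, 800)
```

Plugging in a monitor then amounts to attaching an output to a CRTC and growing the one screen, rather than adding a new core X screen.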

Then he went on to show the command-line tool randr12, which allows you to view the current state and also change the above three things on the fly. It worked really well and looked sweet.

When asked when this could be in X.Org, he said probably X.Org 7.3

Personal comment: This is really great stuff and I can't wait for X.Org 7.3 to appear!

aKademy 2006: Phonons in Solids

Note: I cannot get fish:// working correctly through this network (the connection breaks every time I try to upload something), so I could not upload the images mentioned in the text. So you have to live without those images. Sorry.

This talk was held by Matthias Kretz (Phonon) and Kevin Ottens (Solid).

First they explained the common architecture which they both use. After that they explained Solid and Phonon further.

Requirements:

Solution: Frontend/Backend split (Loose coupling with other projects. Gain portability, flexibility and binary compatibility)

General Pattern: (see image)

Allows binary compatible frontend classes

Even live backend switching will be possible: frontend is signaled to switch, frontend tells all frontend objects to save state and delete their backend object, then load the new backend and reload the state with the state objects.
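
The switching sequence described above (save state, delete the backend object, load the new backend, restore state) can be sketched in Python as follows. None of these classes are Phonon's; `Frontend`, `Player` and the two backend classes are hypothetical and only show the order of the steps.

```python
class BaseBackend:
    """Hypothetical backend object holding playback state."""
    name = "base"

    def __init__(self):
        self.url = None
        self.position = 0


class XineLikeBackend(BaseBackend):
    name = "xine-like"


class FakeBackend(BaseBackend):
    name = "fake"


class Player:
    """Frontend object with a stable API and a replaceable backend object."""

    def __init__(self, frontend):
        self._frontend = frontend
        self._backend = frontend.backend_factory()

    def play(self, url, position=0):
        self._backend.url = url
        self._backend.position = position

    def backend_name(self):
        return self._backend.name

    def current(self):
        return self._backend.url, self._backend.position

    def save_state_and_drop_backend(self):
        state = {"url": self._backend.url, "position": self._backend.position}
        self._backend = None            # delete the old backend object
        return state

    def restore(self, state):
        self._backend = self._frontend.backend_factory()
        self._backend.url = state["url"]
        self._backend.position = state["position"]


class Frontend:
    """Knows the current backend and every frontend object created from it."""

    def __init__(self, backend_factory):
        self.backend_factory = backend_factory
        self.objects = []

    def create_player(self):
        player = Player(self)
        self.objects.append(player)
        return player

    def switch_backend(self, new_factory):
        states = [obj.save_state_and_drop_backend() for obj in self.objects]
        self.backend_factory = new_factory      # "load" the new backend
        for obj, state in zip(self.objects, states):
            obj.restore(state)
```

Application code only ever holds `Player` objects, so it does not notice that the backend underneath was exchanged.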

The question that remains is: How should this all be implemented?

Cons: BC concerns on the backend side, multiple inheritance, several pointers to the backend class, you can't have a QWidget in the inheritance tree

Cons: no compile time checking, requires more work for explaining backend writing (no interface classes to document with doxygen), slower method calls (invokeMethod() has overhead with string parsing/comparison)

Declaring and Implementing Q_INTERFACES

Uses the Q_DECLARE_INTERFACE macro.

The frontend class has a QObject * member m_iface pointing to the backend object. (image?)
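
The trade-off between calling backend methods by name and going through a declared interface can be shown with a loose Python analogy. Python has no compile-time checking, so this sketch only captures part of the argument: with an explicit interface a wrong backend is rejected up front, while name-based dispatch discovers the problem only when the call is attempted. All class and function names here are invented; this is not Qt code.

```python
from abc import ABC, abstractmethod


class AudioOutputInterface(ABC):
    """Explicit backend interface that backends are expected to implement."""

    @abstractmethod
    def set_volume(self, volume):
        ...


class GoodBackend(AudioOutputInterface):
    def __init__(self):
        self.volume = 1.0

    def set_volume(self, volume):
        self.volume = volume


class SloppyBackend:
    """Does not implement the interface and misspells the method name."""

    def set_volumme(self, volume):
        self.volume = volume


def invoke_by_name(backend, method_name, *args):
    """String-based dispatch: a missing method is noticed only at call time."""
    method = getattr(backend, method_name, None)
    if method is None:
        return False
    method(*args)
    return True


def call_via_interface(backend, volume):
    """Interface-based dispatch: reject objects that lack the interface."""
    if not isinstance(backend, AudioOutputInterface):
        raise TypeError("backend does not implement AudioOutputInterface")
    backend.set_volume(volume)


if __name__ == "__main__":
    print(invoke_by_name(GoodBackend(), "set_volume", 0.5))    # True
    print(invoke_by_name(SloppyBackend(), "set_volume", 0.5))  # False
```

The interface class also gives backend authors one documented place to look, which addresses the documentation concern raised in the cons above.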

To fix the current situation. In KDE3 we have:

This sucks because it is so diverse.

New use cases have appeared: Bluetooth is already here, and hardware is much more modular (pluggable) (USB/PCMCIA, hotswap, etc.)

Solid will have several domains: hardware discovery, power & network management.

And then Solid has policy agents: knetworkmanager and mediamanager are already there. They are responsible for interacting with the user and provide a library to access the hardware.

From Solid's point of view:

Domain specific interaction should be done in domain specific code (playing music > Phonon, printer > kdeprint, etc.)

Hardware discovery (image)

Following are some examples of how easy it is to use.

Power management has one central class, PowerManager, which takes care of all things related to power management (throttle the CPU, darken the display, spin down the hard disk, etc.)

It has useful signals that tell you when the battery state has changed, among other things.

Hardware Discovery works in three levels:

Current state:

Hardware discovery and network management is almost done, a few features for system statistics, need more applications using it.

Policy agents: porting medianotifier, knetworkmanager and friends (target for the coding marathon)

Backend: everything required for Linux-like systems is done, more backends need to be written for other systems

(the developers perspective)

Core classes (image)

Phonon Audio player: A very easy to use class called AudioPlayer, that provides enough features to be used by Juk. It provides methods to play() a KURL or prelod a file and then play it back later with smaller latency. Pause() Stop() and Seek() methods provide all that is needed for basic audio playing support in applications.

Example: (image 2228)

The VideoPlayer class functions nearly the same, the only difference is, that it is a QWidget and you can embed it everywhere you like.

Example: (image 2229)

But modern audo players want more. (gapless playback, crossfades, equalizer, brightness controls, audio visualizations, etc).

For this use cases, the PhononMediaQueue comes into play. It allows to preload the next file (for crossfades) and use the Effects API to do other things.

Currently there are 3 and a half backend implementations: NMM, Xine, avKode and Fake.

NMM is the one which is likely to be most feature complete, because it is the only to provide recording for the AvCapture part of phonon. I also has already support to stream data over KIO so any valid KUrl may be used for media playback.

Xine is the one which gets out results really quickly, but will never be as complete since recording is missing completely. avKode was a Sommer of Code project and is based on ffmpeg and mencode(?)

In the following Matthias explained how the xine backend is implemented (as it is him who implements it) and shows the challenges he faced.

Todo list: (existing backends can only do simple playback, no more)

As you see, there is still lots of things to do, so please consider joining the efforts!

Personal Comment: I really appreciate the work the two guys do, and I am full of hope that for KDE4 the "plug and play" for hardware and audio will "just work", and provide a convienient, unified and straigth forward interface to the user.

This talk was given by Matthias Kretz (Phonon) and Kevin Ottens (Solid).

First they explained the common architecture that both projects use. After that they explained Solid and Phonon in more detail.

Common Architecture Pattern

Requirements:

- Cross-project collaboration ("multimedia is not our business")

- Release cycles and binary compatibility (other projects work for fun too; we don't want to force our own cycles and rules on them)

- Flexibility: provide choice to users and distributors, switch subsystems on the fly

- Portability: there are new porting concerns (non-free platforms, hint hint Windows), and the architecture should allow for them

Solution: Frontend/Backend split (Loose coupling with other projects. Gain portability, flexibility and binary compatibility)

General Pattern: (see image)

Allows binary compatible frontend classes

Even live backend switching will be possible: frontend is signaled to switch, frontend tells all frontend objects to save state and delete their backend object, then load the new backend and reload the state with the state objects.
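The switching sequence described above can be sketched in a few lines of plain C++. This is only a minimal sketch under my own assumptions: the names Backend, FirstBackend, SecondBackend and FrontendObject are made up, the only state is a volume value, and the real Phonon and Solid classes will look different.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>

// Hypothetical backend interface, not the real Phonon or Solid one.
struct Backend {
    virtual ~Backend() = default;
    virtual std::string name() const = 0;
    virtual void setVolume(int percent) = 0;
    virtual int volume() const = 0;
};

// Two made-up backends that only differ in their name.
struct FirstBackend : Backend {
    int m_volume = 0;
    std::string name() const override { return "first"; }
    void setVolume(int percent) override { m_volume = percent; }
    int volume() const override { return m_volume; }
};

struct SecondBackend : Backend {
    int m_volume = 0;
    std::string name() const override { return "second"; }
    void setVolume(int percent) override { m_volume = percent; }
    int volume() const override { return m_volume; }
};

// The frontend object is the only class an application talks to.
class FrontendObject {
public:
    explicit FrontendObject(std::unique_ptr<Backend> backend)
        : m_backend(std::move(backend)) {
        assert(m_backend);
    }

    void setVolume(int percent) { m_backend->setVolume(percent); }
    int volume() const { return m_backend->volume(); }
    std::string backendName() const { return m_backend->name(); }

    // Live switch: save state, delete the old backend object,
    // take over the new one, then restore the saved state.
    void switchBackend(std::unique_ptr<Backend> next) {
        assert(next);
        const int savedVolume = m_backend->volume();
        m_backend.reset();
        m_backend = std::move(next);
        m_backend->setVolume(savedVolume);
    }

private:
    std::unique_ptr<Backend> m_backend;
};
```

An application would keep a FrontendObject and never notice the switch: after switchBackend() the volume it set before is still there, only backendName() reports a different backend.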

The question that remains is: How should this all be implemented?

Interface Based Approach

Pros: Enforces compile-time checking, easy to document, fast method calls

Cons: BC concerns on the backend side, multiple inheritance, several pointers to the backend class, you can't have a QWidget in the inheritance tree

Introspection based Approach

Pros: No BC concerns, no need to maintain two sets of classes (frontend & interfaces), free to partially implement a backend class

Cons: no compile-time checking, requires more work for explaining backend writing (no interface classes to document with doxygen), slower method calls (invokeMethod() has overhead with string parsing/comparison)
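To make the trade-off between the two approaches more concrete, here is a small sketch in plain C++. It is not Qt code: DynamicBackend and its invoke() are a made-up stand-in for calling a method by name (what invokeMethod() does in Qt), and PlayerInterface and CompleteBackend are hypothetical names as well.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <string>

// Interface-based approach: the compiler refuses to build a backend
// that forgets to implement play().
struct PlayerInterface {
    virtual ~PlayerInterface() = default;
    virtual bool play() = 0;
};

struct CompleteBackend : PlayerInterface {
    bool play() override { return true; }
};

// Introspection-style approach: methods are looked up by name at run time.
// A backend may register only some of them (partial implementation).
struct DynamicBackend {
    std::map<std::string, std::function<void()>> methods;

    // Returns false if the backend does not provide the method.
    // A typo in the name is only noticed here, not at compile time.
    bool invoke(const std::string &name) const {
        auto it = methods.find(name);
        if (it == methods.end())
            return false;
        assert(it->second); // an empty std::function would throw when called
        it->second();
        return true;
    }
};
```

The string lookup in invoke() is where the extra cost of the introspection approach comes from, and the missing map entry is what "free to partially implement a backend class" looks like in practice.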

Qt to the Rescue

(image)

Declaring and Implementing Q_INTERFACES

Uses the Q_DECLARE_INTERFACE macro.

The frontend class has a QObject * member m_iface pointing to the backend object. (image?)

Solid

Why?

To fix the current situation. In KDE3 we have:

- different hardware discovery (media manager, medianotifier)

- network management (knetworkmanager, kwifimanager)

- power management (kpowersave, klaptop, sebas' powermanager...)

This sucks because it is so diverse.

There are new use cases now: Bluetooth is already here, and hardware is much more modular and pluggable (USB, PCMCIA, hotswap, etc.)

Solid will have several domains: hardware discovery, power management and network management.

Solid also has policy agents: knetworkmanager and mediamanager are already there. They are responsible for interacting with the user and provide a library to access the hardware.

From Solid's point of view:

- Report as many hardware facts as possible

- no device interaction

- storage is the only exception

Domain-specific interaction should be done in domain-specific code (playing music > Phonon, printer > kdeprint, etc.)

Hardware discovery (image)

Some examples followed, showing how easy it is to use.

Power management has one central class, PowerManager, which takes care of all things related to power management (throttling the CPU, darkening the display, spinning down the hard disk, etc.)

It has useful signals that tell you, for example, when the battery state has changed.

Hardware Discovery works in three levels:

- First: DeviceManager: subsystem, device listing, creates Device objects

- Second: Device: parent/child relationship, basic information, is a factory for Capabilities

- Third: Capabilities: provide specific information about the capabilities of a device
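The three levels can be pictured with a small plain C++ sketch. Please note that this is not the Solid API: the class and method names (addDevice(), findByCapability(), the id strings and so on) are my own assumptions, chosen only to show how manager, device and capability relate to each other.

```cpp
#include <algorithm>
#include <cassert>
#include <string>
#include <utility>
#include <vector>

// Third level: specific information about one capability of a device.
struct Capability {
    std::string type;      // e.g. "Volume"; empty if the device lacks it
    std::string deviceId;  // the device this capability belongs to
};

// Second level: parent/child relationship, basic information,
// and a factory for capabilities.
class Device {
public:
    Device(std::string id, std::string parentId, std::vector<std::string> types)
        : m_id(std::move(id)), m_parentId(std::move(parentId)), m_types(std::move(types)) {
        assert(!m_id.empty());
    }

    const std::string &id() const { return m_id; }
    const std::string &parentId() const { return m_parentId; }

    bool hasCapability(const std::string &type) const {
        return std::find(m_types.begin(), m_types.end(), type) != m_types.end();
    }

    // Factory: returns an empty Capability if the device does not have it.
    Capability capability(const std::string &type) const {
        return hasCapability(type) ? Capability{type, m_id} : Capability{};
    }

private:
    std::string m_id;
    std::string m_parentId;
    std::vector<std::string> m_types;
};

// First level: keeps the device list and answers queries on it.
class DeviceManager {
public:
    void addDevice(Device device) { m_devices.push_back(std::move(device)); }
    const std::vector<Device> &allDevices() const { return m_devices; }

    std::vector<Device> findByCapability(const std::string &type) const {
        std::vector<Device> result;
        for (const Device &d : m_devices)
            if (d.hasCapability(type))
                result.push_back(d);
        return result;
    }

private:
    std::vector<Device> m_devices;
};
```

An application would ask the manager for all devices with a given capability, walk up to the parent via parentId() if needed, and then ask the device for the capability object to read the details.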

Current state:

Hardware discovery and network management is almost done, a few features for system statistics, need more applications using it.

Policy agents: porting medianotifier, knetworkmanager and friends (target for the coding marathon)

Backend: everything required for Linux-like systems is done, more backends need to be written for other systems

Phonon

Motivation:

(the user's perspective)

- A user should be able to play back media without any configuration

- "Power users" want great flexibility

- Additional multimedia hardware (think USB headset) should be available to all applications without any further steps

- Users need to decide which device to use for which purpose or program

(the developer's perspective)

- Qt/KDE style api

- developers need APIs that are straight forward and easy to use

- applications need a multimedia API which also works on completely different platforms (think Mac OS X, Windows)

- ABI/API changes in multimedia frameworks should not prevent KDE from using the newest media framework

Core classes (image)

Phonon audio player: A very easy to use class called AudioPlayer that provides enough features to be used by JuK. It provides methods to play() a KURL, or to preload a file and play it back later with lower latency. The Pause(), Stop() and Seek() methods provide all that is needed for basic audio playback support in applications.

Example: (image 2228)

The VideoPlayer class works nearly the same way; the only difference is that it is a QWidget, so you can embed it wherever you like.

Example: (image 2229)

But modern audio players want more (gapless playback, crossfades, equalizer, brightness controls, audio visualizations, etc).

For these use cases, the PhononMediaQueue comes into play. It allows preloading the next file (for crossfades) and using the Effects API to do other things.

Currently there are 3 and a half backend implementations: NMM, Xine, avKode and Fake.

NMM is the one which is likely to be the most feature complete, because it is the only one to provide recording for the AvCapture part of Phonon. It also already supports streaming data over KIO, so any valid KUrl may be used for media playback.

Xine is the one which gets results really quickly, but it will never be as complete, since recording is missing completely. avKode was a Summer of Code project and is based on ffmpeg and mencode(?)

Matthias then explained how the Xine backend is implemented (he is the one implementing it) and showed the challenges he faced.

Todo list: (existing backends can only do simple playback, no more)

- Implement Xine::ByteStream for KIO Urls

- Xine video breaks because it wants XThreads

- NMM needs a VideoWidget implementation

- The AvCapture API implementation needs to be finished

- Effects (EQ, Fader, Compressor, etc)

- Phonon-Gstreamer anyone?

- KIO Seeking in MediaObject

- (good) user interface for phonon configuration

- Network API: VoIP

- DVD/TV support, chapters

- OSD (video overlay)

- device listing (how to get alsa device list?)

As you see, there are still lots of things to do, so please consider joining the efforts!

Personal Comment: I really appreciate the work the two guys do, and I am full of hope that for KDE4 the "plug and play" for hardware and audio will "just work", and provide a convenient, unified and straightforward interface to the user.

aKademy 2006: Plasma - what the bleep is it?

Unfortunately I have no transcript of this talk. - Instead of writing on the notebook, I filmed the whole talk. I hope to make the video available on Tuesday evening for everyone to download.

(I know there will also be "official" videos, but perhaps i will be a little bit faster :-) )

aKademy 2006: QtDBus and interoperability

After the keynote from Aaron to start the day, the first talk I attended was the one from Thiago Macieira. He works at Trolltech and brought us the QtDBus bindings in Qt 4.2.

(unfortunately I forgot to take some photos...)

The subtitle of the talk was: "How You Can Use D-Bus to Achieve Interoperability"

Here is what I wrote down while he talked:

He talked about what interoperability is: two different pieces of software working together

This talk is mainly about inter-process interoperability.

D-Bus is an Inter-Process Communication / Remote Procedure Call (IPC/RPC) system defined by freedesktop.org

"D-Bus is a message bus system, a simple way for applications to talk to one another" (citation from freedesktop.org)

D-Bus works like a star formation with one daemon in the middle connecting the different applications together.
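The star formation can be pictured with a toy model in plain C++. This is only a sketch under my own assumptions: ToyBus is a made-up, in-process stand-in for the daemon, while the real D-Bus is a wire protocol between separate processes. What it shows is that a caller only names the destination and the hub in the middle does the routing.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <string>
#include <utility>

// Toy stand-in for the bus daemon in the middle of the star.
class ToyBus {
public:
    using Handler = std::function<std::string(const std::string &)>;

    // An application connects under a unique name.
    // Returns false if the name is already taken.
    bool connect(const std::string &name, Handler handler) {
        assert(handler); // a peer without a handler could never answer
        return m_peers.emplace(name, std::move(handler)).second;
    }

    // The caller never talks to the destination directly:
    // it hands the message to the bus, which routes it.
    // Returns false if nobody is connected under that name.
    bool call(const std::string &destination, const std::string &message,
              std::string &reply) const {
        auto it = m_peers.find(destination);
        if (it == m_peers.end())
            return false;
        reply = it->second(message);
        return true;
    }

private:
    std::map<std::string, Handler> m_peers;
};
```

With a hub like this, two applications never need to link against each other; each one only needs to know the bus and the name of the peer it wants to reach.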

He explains what interoperability is good for: another method to access your technology. This brings: broader user-base, less resource usage on the system because of reuse, good PR because you are the nice guy :-)

But even if your application has no distinctive features, interoperability gives you the ability to access other applications' features etc.!

Interoperability example:

The Desktop API (DAPI)

Allows access to desktop functionality like sending email, turning screensavers on/off, opening URLs etc.

DAPI consists of two components: A daemon and a library that links to the application.

To use this (interoperability) you have to define an API, and define a format for the information exchange.

First solution: write a library

Has positive (fast, little modification) and negative (if you use C++ you are tied to it, if you use Qt and KDE the others have to use them too, scripting languages have problems using the library) aspects.

Second solution: write an external application

(Like xdg-utils)

No problem with linking, easy access from any language or shell

Requires re-parsing of data, performance penalty of starting new processes every time

Third solution: socket, pipe or another raw IPC system

Solves the problem with linking, no penalty with process starting, easy to access from any language.

But: requires establishing and testing the new protocol, requires the protocol to be established on each participant, difficult to add improvements, difficult to access from shell scripts.

Fourth solution: other IPC/RPC systems

Instead of defining your own IPC/RPC system, use existing solutions like DCOP

No problem with linking, no penalty with process starting, easy to connect from any language, command-line access tool is possible.

But: access from any language is limited by the availability of implementations, DCOP suffers from forward compatibility problems and is hard to extend.

Final solution: D-Bus

Has been modelled after DCOP and so has all of its benefits.

In addition:

- It has been designed with forward compatibility with future extensions in mind

- Many bindings already available: glib, Mono, Python, Perl, Java

- Works on all platforms KDE supports (work on Windows ongoing)

- Best of all: it is natively supported by Qt and KDE

- it's the IPC/RPC system that we chose to replace DCOP

- it's been designed to replace DCOP (concepts are very similar)

- it integrates nicely with the Qt Meta Object and Meta Type Systems

You don't have much to do to use it: the KDE build system (CMake) can generate the code you need.

To export functionality:

- exporting from QObject derived classes is easy, allowing any slot to be called on it

- better is to use a class derived from QDBusAbstractAdaptor, which exports just the functionality you want to export

- process XML definition of an interface into a C++ class derived from QDBusAbstractAdaptor (this way you can easily implement an interface from third parties)

Saturday, September 23, 2006

aKademy 06: Finally internet!

Obviously I lost the whole post when clicking on "Preview" in Konqueror! :-( Bah!

Time to fetch a pizza now, I will have internet again tomorrow and will post some transcripts and photos of talks...

Thursday, September 21, 2006

I'm going to Akademy 2006!

Yes, this year is the first time I will attend Akademy, the annual KDE conference.

This year it will be held in Dublin, Ireland. My flight will be tomorrow at 16:20 from Frankfurt.

I'm very happy to take part in this event, and I hope that this will be the start of me hacking on KDE... - I already have some ideas in mind on how to contribute to a great KDE4. :-)

I'm also eagerly awaiting the cool talks this year, and I hope to take some photos and create some transcripts of the talks I attend.

Here is a preliminary list of talks I plan to attend:

Saturday:

- QtDBus and interoperability

- Plasma: What the bleep is it?

- Phonons in Solids - Internals and Usage Explained

- Competition And Cooperation - An Honest Look At The Dynamics Between The KDE And GNOME Communities

- Multi-Head RandR

- Akonadi - The KDE 4.0 PIM Framework

- Decibel - You Are Not Alone!

- State of KHTML

Sunday:

- KDE 4 Development Setup

- Performance techniques

- An Introduction to CMake, CTest and Dart

- Qyoto/Kimono C# bindings

- Kickoff - Start Menu Research

- Accomplishments And Challenges Of The KDevelop Team

I hope to be able to give some brief transcripts of all these talks, so if you are interested in any of them and cannot attend, stay tuned!